tamuGPT

Overview

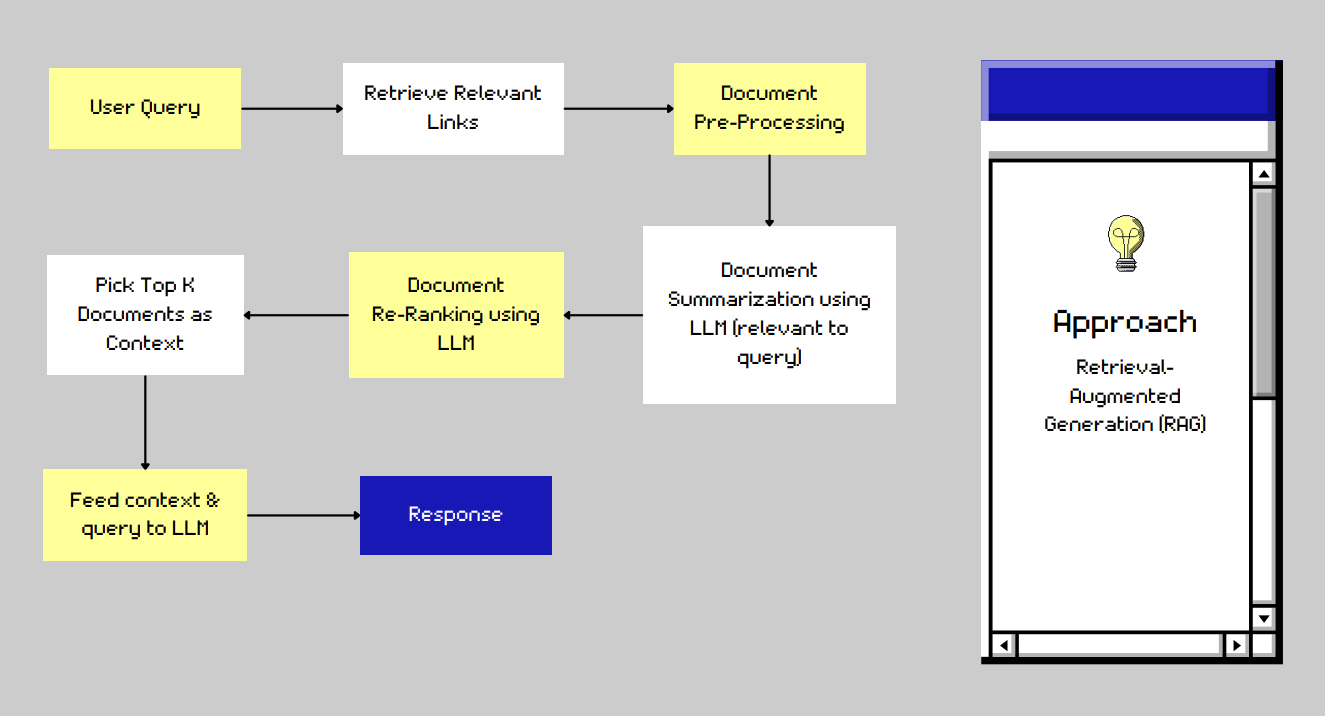

tamuGPT is a chatbot from Texas A&M built on a Retrieval Augmented Generation (RAG) architecture. Its workflow is outlined below.

Taking the user input:

- User Query: The process begins with capturing the user's query.

Leveraging Google Search:

- Information Retrieval with Google Search API: tamuGPT utilizes the Google Search API to retrieve a set of potentially relevant documents (e.g., top 10 links) that address the user's query.
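The retrieval step could look roughly like the sketch below. This document does not say which Google search product tamuGPT uses, so the sketch assumes the Custom Search JSON API; the function names, `api_key`, and `engine_id` are hypothetical placeholders, not part of tamuGPT.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumption: the Custom Search JSON API endpoint (the source only says "Google Search API").
SEARCH_ENDPOINT = "https://www.googleapis.com/customsearch/v1"


def build_search_url(query, api_key, engine_id, num_results=10):
    """Build the request URL for one page of search results."""
    if not 1 <= num_results <= 10:
        # The Custom Search JSON API returns at most 10 results per request.
        raise ValueError("num_results must be between 1 and 10")
    params = {"key": api_key, "cx": engine_id, "q": query, "num": num_results}
    return f"{SEARCH_ENDPOINT}?{urlencode(params)}"


def extract_links(response_json):
    """Pull the result URLs out of a decoded API response."""
    return [item["link"] for item in response_json.get("items", []) if "link" in item]


def search(query, api_key, engine_id, num_results=10):
    """Call the API over the network and return the top result links."""
    url = build_search_url(query, api_key, engine_id, num_results)
    with urlopen(url, timeout=10) as resp:
        return extract_links(json.load(resp))
```

Keeping URL construction and response parsing separate from the network call makes both easy to check without API credentials.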

Data Preparation:

- Preprocessing and Cleaning: The retrieved content is preprocessed to remove irrelevant information such as headers, footers, and HTML tags.
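As an illustration of this cleaning step, here is a minimal sketch using only Python's standard `html.parser`. The set of skipped tags and the names `TextExtractor` and `clean_html` are assumptions made for the example; the actual tamuGPT preprocessing may differ.

```python
from html.parser import HTMLParser

# Assumption: content inside these elements is treated as irrelevant.
SKIP_TAGS = {"script", "style", "header", "footer", "nav"}


class TextExtractor(HTMLParser):
    """Collect visible text while ignoring everything inside SKIP_TAGS."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0  # > 0 while inside a skipped element
        self._chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0:
            text = data.strip()
            if text:
                self._chunks.append(text)

    def text(self):
        return " ".join(self._chunks)


def clean_html(html):
    """Return the page text with tags, headers, and footers removed."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.text()
```

For example, `clean_html("<header>Menu</header><p>Aggie <b>Ring</b> Day</p>")` yields the plain text `Aggie Ring Day`.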

Content Analysis with GPT-3.5:

- Summarization: The preprocessed content is then passed to the GPT-3.5 model, which summarizes the relevant content.

- Reranking: The summaries are then re-ranked by the GPT-3.5 model.
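The two steps above can be sketched as follows. The template wording, the 0 to 10 scoring scheme, and the `llm` callable (any function that takes a prompt string and returns the model's reply) are assumptions for illustration; this document does not describe how tamuGPT prompts GPT-3.5 or computes the ranking.

```python
import re
from typing import Callable, Dict, List

# Hypothetical templates; the real tamuGPT wording is not given in this document.
SUMMARIZE_TEMPLATE = (
    "Summarize the parts of the following text that help answer the question.\n"
    "Question: {query}\nText: {text}\nSummary:"
)
RERANK_TEMPLATE = (
    "On a scale of 0 to 10, how relevant is this summary to the question? "
    "Reply with a single number.\n"
    "Question: {query}\nSummary: {summary}\nScore:"
)


def parse_score(reply: str) -> float:
    """Extract the first number in the reply; unparseable replies score 0."""
    match = re.search(r"\d+(?:\.\d+)?", reply)
    return float(match.group()) if match else 0.0


def summarize_and_rerank(
    query: str, docs: List[Dict[str, str]], llm: Callable[[str], str]
) -> List[Dict[str, object]]:
    """Summarize each document, score each summary, and sort by score (highest first).

    Each entry in `docs` is expected to have "url" and "text" keys.
    """
    ranked = []
    for doc in docs:
        summary = llm(SUMMARIZE_TEMPLATE.format(query=query, text=doc["text"]))
        reply = llm(RERANK_TEMPLATE.format(query=query, summary=summary))
        ranked.append({"url": doc["url"], "summary": summary, "score": parse_score(reply)})
    ranked.sort(key=lambda item: item["score"], reverse=True)
    return ranked
```

Passing the model in as a plain callable keeps the sketch independent of any particular client library and lets the pipeline be exercised with a stub.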

Response Generation:

- Response: Based on the user's query, the top k (e.g., top 7) ranked summaries, and the insights gained from accessing the original retrieved documents (through RAG), GPT-3.5 generates the final response.

- Source Attribution: The final response lists the URLs of the sources used, so users can access the original content.
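A minimal sketch of the final step is shown below, assuming the ranked summaries arrive as a list of dictionaries with `url` and `summary` keys, already sorted best first. The template text, the `generate_response` name, and the `llm` callable are hypothetical. For brevity the sketch builds its context from the summaries only, whereas the workflow above also draws on the original retrieved documents.

```python
from typing import Callable, Dict, List

# Hypothetical answer template; not taken from tamuGPT itself.
ANSWER_TEMPLATE = (
    "Answer the question using only the context below.\n"
    "Context:\n{context}\n\nQuestion: {query}\nAnswer:"
)


def generate_response(
    query: str, ranked: List[Dict[str, str]], llm: Callable[[str], str], k: int = 7
) -> str:
    """Answer from the top-k summaries and append the source URLs."""
    top = ranked[:k]
    # Number each summary so the source list below uses matching labels.
    context = "\n".join(
        f"[{i}] {item['summary']}" for i, item in enumerate(top, start=1)
    )
    answer = llm(ANSWER_TEMPLATE.format(context=context, query=query)).strip()
    sources = "\n".join(f"[{i}] {item['url']}" for i, item in enumerate(top, start=1))
    return f"{answer}\n\nSources:\n{sources}"
```

Numbering the context entries and the source list the same way gives the reader a simple path from the answer back to each URL.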

Depending on the task, GPT-3.5 is invoked with different templates for ranking, summarization, and answering the query based on the context.

Additional Information

🤖 Technologies:

- Retrieval Augmented Generation (RAG): Relevant context is provided to the GPT-3.5 model, giving it access to the retrieved documents for better insights

- Google Search Integration: The LLM is integrated with the Google Search API to retrieve the top potentially relevant documents

- Summarization and Reranking: Summarization and re-ranking are performed to organize the data chunks and obtain the context for response generation

💪 Challenges:

- Choice of LLM: Choosing the ideal LLM for the summarization, re-ranking and context-integration tasks

- Search Speed: Striking a balance between accuracy and response time

- Computation cost: Designing a custom or hybrid LLM requires additional computational resources

🚀 Future Scope:

- Source Mapping: When a response contains multiple answers, the current model presents a generalized summary of the sources. This could be refined into a 1-to-1 mapping between each answer and its corresponding source

- Expanding retrieval sources: Additional sources like databases, specialized archives or domain-specific repositories could be integrated to provide richer information for RAG to leverage